Chapter 1. Introduction

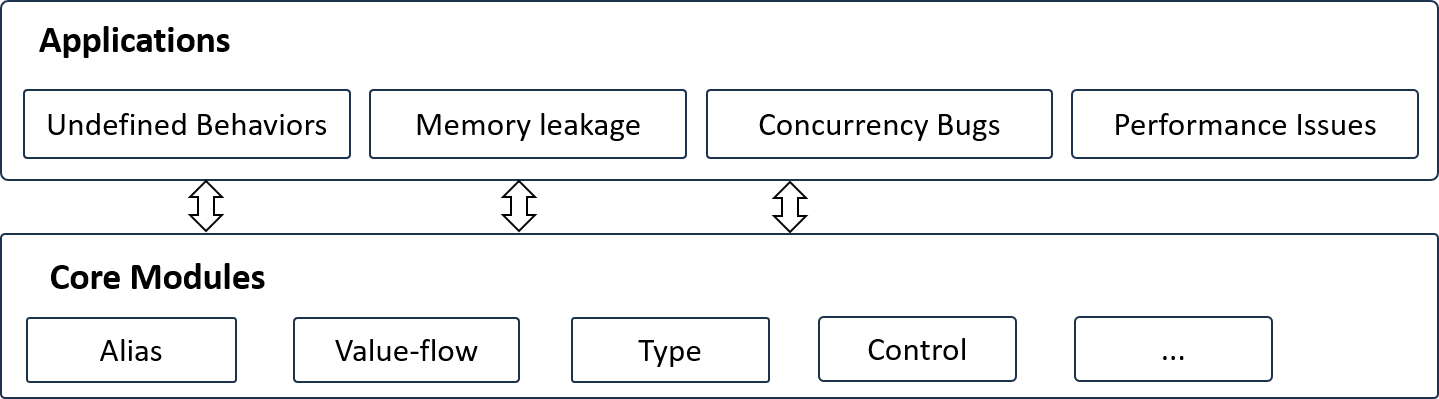

Writing program analysis tools is challenging. In this project, we aim to provide a user-friendly framework for developing new static program analysis features for Rust. In particular, our project integrates dozens of classic program analysis algorithms, including those for pointer analysis, value-flow analysis, control-flow analysis, and more. Developers can choose and combine them like Lego blocks to achieve new bug detection or verification capabilities.

The following figure demonstrates the framework of RAP, which is composed of a core layer and an application layer. Note that the project is still under heavy development, and some modules are not available yet.

Chapter 2. Installation Guide

Quick Start

Installing from Source Code

RAP have two major modules: rap and rap-rust forked from rust master branch. They should be compiled followed by our instructions.

Building on a Unix-like system (Linux / Macintosh)

1. Make sure you have installed the dependencies:

gitninjaclang++17.0 or laterllvm17.0 or laterpython33.11 or laterz34.12 or latermake3.81 or latercmake3.27 or laterrustup1.26 or later

We do not need any version of , we will bootstrap a modified rustc or cargorustc toolchain for further use.

2. Clone the source with git:

git clone https://github.com/Artisan-Lab/RAP.git

cd rap

git submodule update --init --recursive

3. Build and install rap-rust

rap-rust is forking from the original branch of rust. We modified the source code to perform self-defined static

analysis. It must be compiled as dependencies before building rap-cargo.

Now we need to bootstrap rustc to stage2. As all we need is libstd* and librustc_*, those artifacts are from

stage2, therefore the compiler needs to be bootstrapped to stage2 to generate them.

# The script can be run directly on most unix-like systems, such as Macintosh, Linux, etc.

./00-install-rap-rust.sh

It performs the following phases:

- PHASE1: Checking operating system

- PHASE2: Checking build dependencies

rustup - PHASE3: Building, installing and linking

rap-rustintocargo# Copy config.toml to rap-rust cp -f ./config.toml ./rust/config.toml # Start Bootstrap # Using comiler/rustc due to needing rustc_*.rlib/.so cd rust && ./x.py build compiler/rustc -i --stage 2 # Link rap-rust toolchain to current rustup and cargo rustup toolchain link rap-rust build/${HOST_TRIPLE}/stage2

4. Build and install rap-cargo:

Configurations of RAP building system can be modified in Cargo.toml and 01-install-rap-cargo.sh. The build system uses a file named Cargo.toml in the root of the source tree to determine various configuration settings. Cargo.toml can option the compilation of rap and rap-cargo.

# The script can be run directly on most unix-like systems, such as Macintosh, Linux, etc.

./01-install-rap-cargo.sh

It performs the following phases:

-

PHASE1: Checking operating system

-

PHASE2: Checking working directory for

rap -

PHASE3: Checking link of

rap-rust -

PHASE4: Building, installing and linking

rapintocargoIt will install the bin

rapintocargocomponents first:# Execution self cleanup procedure cd rap && cargo clean # Build and install binary 'rap' into cargo components # For debug version of `rap` # cargo install --debug --path "$(dirname "$0")" --force --features backtraces # For release version of `rap` RUSTC_INSTALL_BINDIR=bin CFG_RELEASE_CHANNEL=nightly CFG_RELEASE=nightly cargo install --path "$(dirname "$0")" --forceThe environmental variables will be catched by srcipt automatically, including

${RAP_DIR}and${HOST_TRIPLE}.# Link to .rlib / .rmeta / .so files; for Linux export LD_LIBRARY_PATH="${RAP_DIR}/rust/build/${HOST_TRIPLE}/stage2/lib:$LD_LIBRARY_PATH" export LD_LIBRARY_PATH="${RAP_DIR}/rust/build/${HOST_TRIPLE}/stage2/lib/rustlib/${HOST_TRIPLE}/lib:$LD_LIBRARY_PATH" # Link to .rlib / .rmeta / .dylib files; for Macintosh export DYLD_LIBRARY_PATH="${RAP_DIR}/rust/build/${HOST_TRIPLE}/stage2/lib:$DYLD_LIBRARY_PATH" export DYLD_LIBRARY_PATH="${RAP_DIR}/rust/build/${HOST_TRIPLE}/stage2/lib/rustlib/${HOST_TRIPLE}/lib:$DYLD_LIBRARY_PATH" # Link libraries searching paths for rustc, by using RUSTFLAGs -L DIR export RUSTFLAGS="-L ${RAP_DIR}/rust/build/${HOST_TRIPLE}/stage2/lib"

When complete, 01-install-rap-cargo.sh will link several programs into $PREFIX/bin: rap, the rustc wrapper program for Rust Analysis Platform; rap-cargo, the subcomponent in cargo to invoke rap.

For MaOS users, you may need to manually export z3 related headers and libraries.

export C_INCLUDE_PATH=/opt/homebrew/Cellar/z3/VERSION/include:$C_INCLUDE_PATH

ln -s /opt/homebrew/Cellar/z3/VERSION/lib/libz3.dylib /usr/local/lib/libz3.dylib

Building on Windows

Note: we highly do not advice the user to use the windows as host platform.

There are two prominent ABIs in use on Windows: the native MSVC ABI used by Visual Studio, and the GNU ABI used by

the GCC toolchain.

RAP only supports for interop with GNU software built using the MinGW/MSYS2 toolchain use the GNU build.

MSYS2 can be used to easily build Rust on Windows:

- Download the latest

MSYS2installer and go through the installer. - Run

mingw64_shell.batfrom wherever you installedMSYS2 (i.e. C:\msys64). (As of the latest version ofMSYS2you have to runmsys2_shell.cmd -mingw64from the command line instead) - From this terminal, install the required tools:

# Update package mirrors (may be needed if you have a fresh install of MSYS2) pacman -Sy pacman-mirrors # Install build tools needed for Rust. If you're building a 32-bit compiler, # then replace "x86_64" below with "i686". If you've already got Git, Python, # or CMake installed and in PATH you can remove them from this list. # Note that it is important that you do **not** use the 'python2', 'cmake', # and 'ninja' packages from the 'msys2' subsystem. # The build has historically been known to fail with these packages. pacman -S git \ make \ diffutils \ tar \ mingw-w64-x86_64-python \ mingw-w64-x86_64-cmake \ mingw-w64-x86_64-gcc \ mingw-w64-x86_64-ninja - Navigate to RAP source code (or clone it), then build it.

Framework

Chapter 3.1. Code Analysis in The Frontend

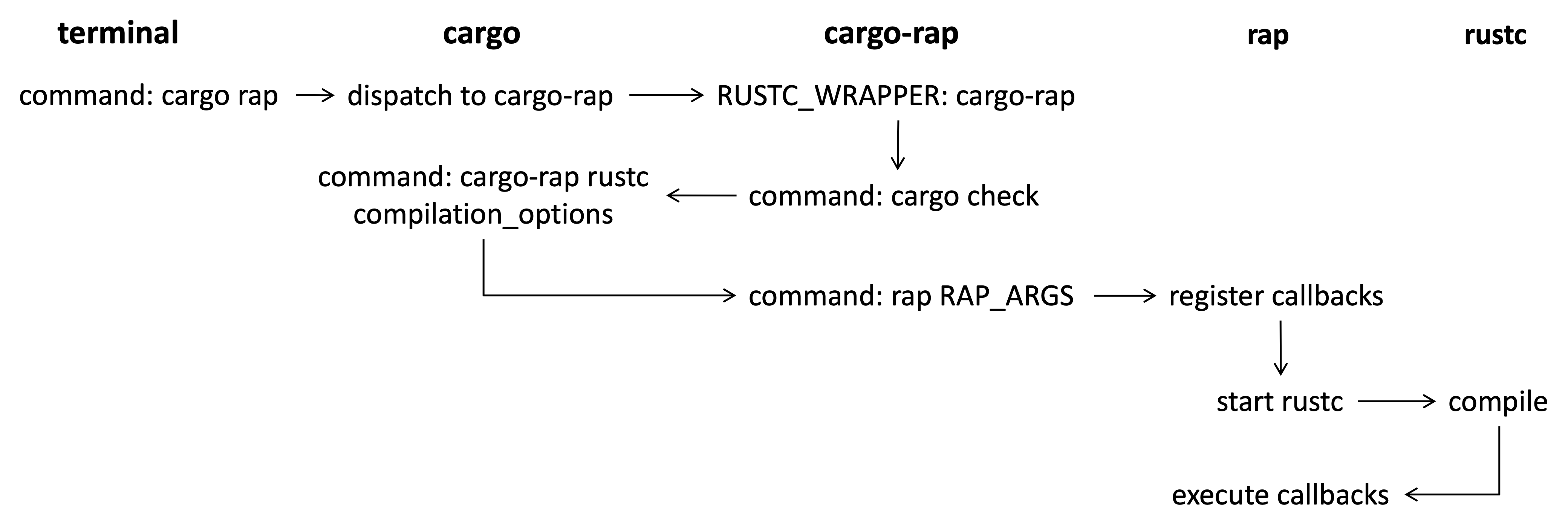

Traditionally, performing code analysis requires modifying the compiler source code to add new passes. Developers then need to recompile the compiler to activate these new passes, which can be cumbersome. The Rust compiler offers a more portable way to conduct code analysis using the rustc_driver. We refer to this approach as the frontend method because it allows developers to directly access internal compiler data and perform analysis as callbacks.

Custom Cargo Commands

To support project-level program analysis, we want the analysis tool to be integrated into cargo as subcommands. To this end, we can name the tool as cargo-toolname and place it in $CARGO_HOME/bin or $PATH. Then we can execute the tool via the following command.

cargo toolname -more_arguments

Cargo will automatically search the binaries named cargo-toolname from the paths. The following figure demonstrates the whole process before reaching our analysis program.

Note that we cannot directly invoke rap in the first round but through cargo check because we need cargo to manage the project-level compilation and append detailed compilation options for launching rustc. However, we want to hook rustc execution and execute rap instead for analysis. Therefore, we set RUSTC_WRAPPER with the value of cargo-rap. In this way, cargo check will actually run

Note that we cannot directly invoke rap in the first round but through cargo check because we need cargo to manage the project-level compilation and append detailed compilation options for launching rustc. However, we want to hook rustc execution and execute rap instead for analysis. Therefore, we set RUSTC_WRAPPER with the value of cargo-rap. In this way, cargo check will actually run cargo-rap rustc appended_options. We then dispath the execution to rap with appended options.

Register Analysis Callbacks

Supposing the purpose is to execute a function named my_analysis, developers should design a new struct and implement the Callbacks Trait for the struct.

pub struct MyCallback {...}

impl Callbacks for MyCallback {

fn after_analysis<'tcx>(&mut self, compiler: &Compiler, queries: &'tcx Queries<'tcx>) -> Compilation {

compiler.session().abort_if_errors();

queries.global_ctxt().unwrap().enter(

|tcx| my_analysis(tcx, *self) // the analysis function to execute after compilation.

);

compiler.session().abort_if_errors();

Compilation::Continue

}

}

To execute the compiler and callback function, developers can employ the APIs rustc_driver::RunCompiler provided by Rust.

let mut callback = MyCallback::default();

let run_compiler = rustc_driver::RunCompiler::new(&args, callback);

let exit_code = rustc_driver::catch_with_exit_code(move || run_compiler.run());

Chapter 3.2. Code Analysis in The Backend

Developers can add a new query to the compiler and invoke the query during compilation. This requires rebuilding the compiler source code, which may take a little while.

Add A New Query

Refering to the official Rust compiler development guide, There are two steps to add a query:

- Declare the query name, its arguments and description in the compiler/rustc_middle/src/query/mod.rs.

rustc_queries! {

query new_query(_: DefId) -> () {

desc { "a new query with novel features" }

}

}

- Supply query providers where needed in compiler/rustc_mir_transform/src/lib.rs.

pub fn provide(providers: &mut Providers) {

*providers = Providers {

new_query,

..*providers

};

}

fn new_query<'tcx>(tcx: TyCtxt<'tcx>, def_id: DefId) -> () {

...//implementation

}

Invoke The Query

In this step, we set an environment variable which is responsible for invoking the newly added query. The correponding file for managing such variables is compiler/rustc_interface/src/passes.rs.

if env::var_os("RUSTC_BOOTSTRAP").is_none() && env::var_os("NEW_QUERY").is_some() {

sess.time("new_query", || {

println!("Starting new_query...");

tcx.hir().par_body_owners(|def_id| tcx.ensure().new_query(def_id));

});

}

Chatper 4. Preliminary: Compiler Internals

Compiler Internal

We list the essential official documents to read for better understanding the internal data structures of Rust compiler.

- HIR

- MIR

- TyCtxt is the central data structure of Rust compilers. We can obtain the hir or mir of a function based on the object.

#![allow(unused)] fn main() { let hir = tcx.hir(); let mir = optimized_mir(def_id); // def_id is of type DefId }

Command to Display HIR/MIR

Execute the following command to obtain the HIR/MIR of the source code.

cargo rustc -- -Z unpretty=hir-tree

cargo rustc -- -Zunpretty=mir

Basic Modules

TODO

TODO

TODO

TODO

Heap-item Analysis (Type Analysis)

What is heap item?

Here we first give several definitions for further usage in this section. These definitions can been referred in the paper of rCanary.

- Heap Item: A heap item is an object containing the heap allocation with a fixed type (layout).

- Heap-item Unit: A heap-item unit has a

PhantomData<T>field with a typed generic parameter and a pointer field to store the valueT. - Isolated Parameter: An isolated parameter is a stand-alone and typed generic parameter (e.g.,

Tbut not&T).

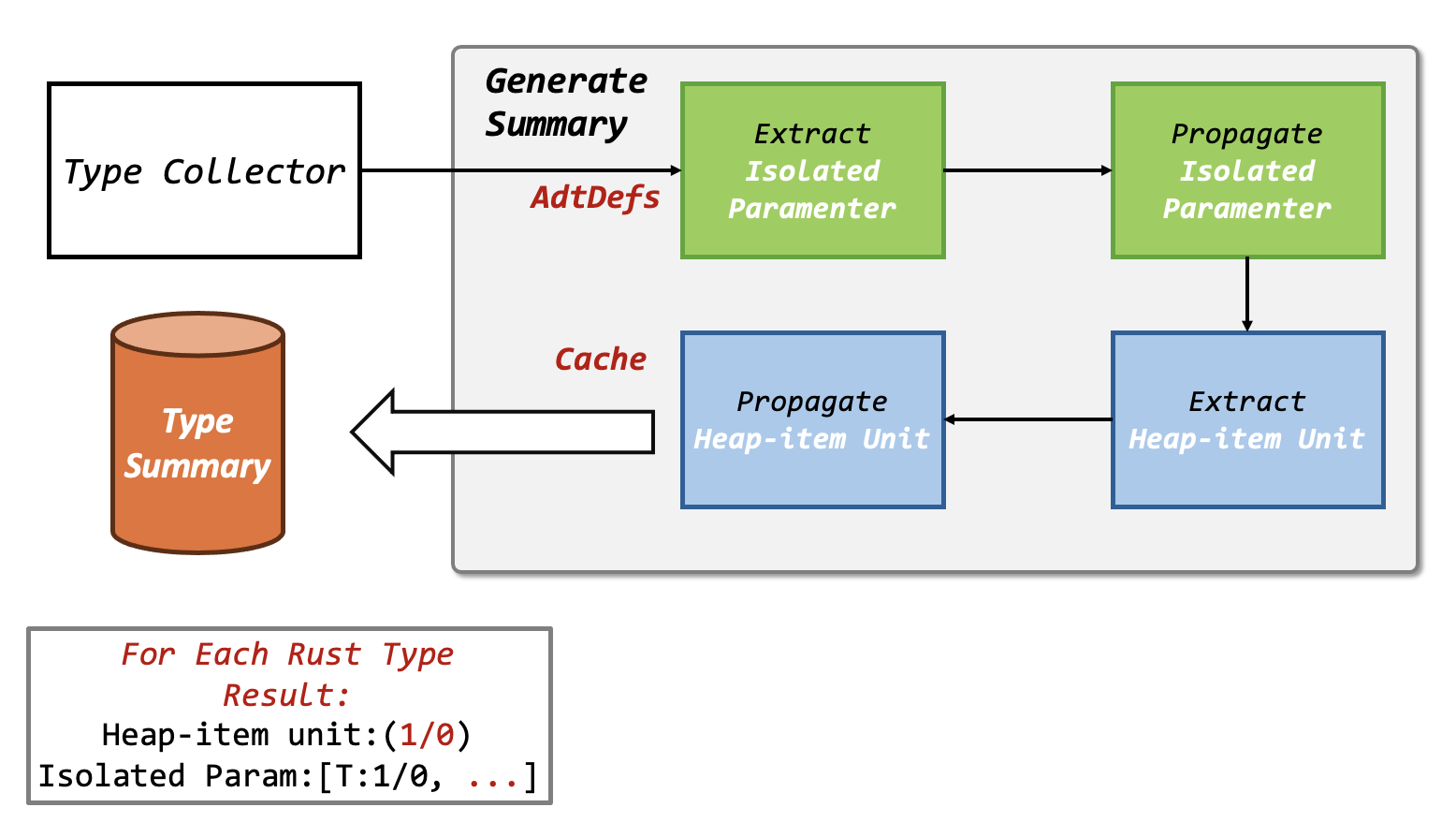

In the type analysis, we need to generate an abstraction for all rust types used in the local crate. Each type has a layout summary to illustrate the data abstraction in the fully initialized state.

The summary comprises two parts:

- a boolean value indicating if it contains a heap item;

- a boolean vector indicating if each generic parameter is an isolated parameter.

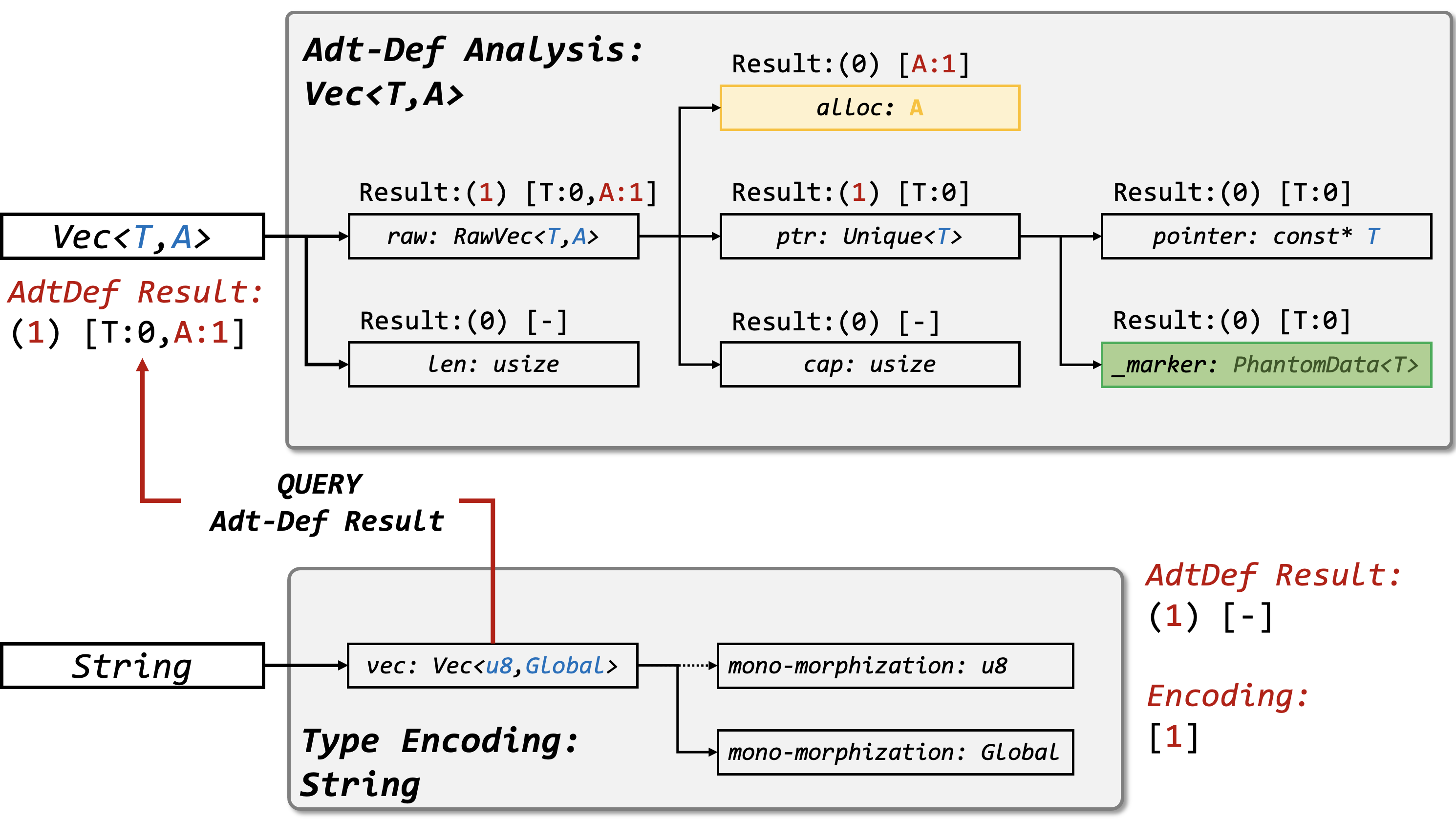

For example, the summary of the vector Vec<T,A> is (1) [0, 1].

Overview

We give the overall framework of type analysis as below.

Then we will discuss the workflow of each component in this figure.

The visitor method is main pass of the constructor part in type analysis, it will perform several important procedural

to determine whether an adt definition (adt-def) will occupy at least one heap allocation, reflecting holding heap-ownership

in RAP system.

From the top-down method of our approach, this visitor is the set of several sub-phases which means it contains multiple sub-visitors to make whole method self.visitor() work.

For example, given an AdtDef (like Vec<T>), the result of visitor contains two parts:

- pt1 Enum:

{Owned / UnOwned}indicates whether it will directly have a heap data - pt2 Array:

[bool;N]indicates whether each generic parameter will have a raw param

Those 2 parts can accelerate heap-ownership inference in the data-flow analysis.

Type Collector

We use an inter-procedural visitor to collect all the types for local crate and the types are after monomorphism. Our goal is to extract all the AdtDefs.

The struct TypeAnalysis implements mir::Visitor to simulate as the type collector.

#![allow(unused)] fn main() { pub struct TypeAnalysis<'tcx, 'a> { rcx: &'a mut RapGlobalCtxt<'tcx>, fn_set: Unique, ty_map: TyMap<'tcx>, adt_recorder: Unique, } }

Note: the type in this phase is

Ty::tyrather ofHir::ty.

As we can see, the function visit_ty will flatten all the fields and generics appeared. This procedural can cover almost all the rust types.

#![allow(unused)] fn main() { impl<'tcx, 'a> TypeAnalysis<'tcx, 'a> { fn visit_ty(&mut self, ty: Ty<'tcx>, ty_context: TyContext) { match ty.kind() { TyKind::Adt(adtdef, substs) => { // ... for field in adtdef.all_fields() { self.visit_ty(field.ty(self.tcx(), substs) ,copy_ty_context(&ty_context)) } for ty in substs.types() { self.visit_ty(ty, copy_ty_context(&ty_context)); } }, TyKind::Array(ty, ..) => { self.visit_ty(*ty, ty_context); }, TyKind::Slice(ty) => { self.visit_ty(*ty, ty_context); }, TyKind::RawPtr(typeandmut) => { let ty = typeandmut.ty; self.visit_ty(ty, ty_context); }, TyKind::Ref(_, ty, ..) => { self.visit_ty(*ty, ty_context); }, TyKind::Tuple(tuple_fields) => { for field in tuple_fields.iter() { self.visit_ty(field, copy_ty_context(&ty_context)); } }, _ => return, } } } }

The AdtDef are finally stored in the field adt_recorder. After the collecting all AdtDef, we have 4 phases to generate the summary for each type.

#![allow(unused)] fn main() { start_channel(|did| self.extract_isolaoted_parameter(did), &dids); start_channel(|did| self.extract_isolaoted_parameter_prop(did), &dids); start_channel(|did| self.extract_heap_item_unit(did), &dids); start_channel(|did| self.extract_owner_prop(did), &dids); }

Extract Isolated Parameters

Extract params in adt types, the param means one generic parameter acting like T, A, etc...

In the sub-visitor IsolatedParameter, it will visit the given type recursively, and extract all params to construct a mapping.

#![allow(unused)] fn main() { #[derive(Clone)] struct IsolatedParameter<'tcx> { tcx: TyCtxt<'tcx>, parameters: Parameters, } }

Note we only interest in isolated params (T not like *mut T). It lies in one-entire field | recursive in tuple | recursive in array | mixed before.

The implementation can be found in function extract_raw_generic and trait impl impl TypeVisitor for IsolatedParameter.

Here, we give some examples of extracting isolated parameters. Given a struct Example<A, B, T, S>:

#![allow(unused)] fn main() { struct Example<A, B, T, S> { a: A, b: (i32, (f64, B)), c: [[(S) ; 1] ; 2], d: Vec<T>, } }

The final result for <A, B, T, S> is <true, true, false, true>.

Propagate Isolated Parameter

Extract all params in the adt types like param T and then propagate from the bottom to top.

This procedural is the successor of extracting isolated parameters, and the main idea of IsolatedParameterPropagation is to propagate params from bottom AdtDef to the top as well as updating TypeAnalysis Context.

Note that it will thorough consider mono-morphization existed in the AdtDef. That means the type Vec<T>, Vec<Vec<T>> and Vec<i32> are totally different!!!!

Given a struct Example<A, B, T, S>:

#![allow(unused)] fn main() { struct X<A> { a: A, } struct Y<B> { a: (i32, (f64, B)), b: X<i32>, } struct Example<A, B, T, S> { a: X<A>, b: (i32, (f64, B)), c: [[(S) ; 1] ; 2], d: Vec<T>, } }

The final result for X<A> is <true>, for Y<B> is <true>, and for Example<A, B, T, S> is <true, true, false, true>.

Extract Heap-item Unit

Next, we need to extract all types that has PhantomData<T> inside which T must be a isolated parameter. Consider these types as a unit to guide the traversal over adt types.

As for one heap-item unit, only struct will contains the information that we want like

#![allow(unused)] fn main() { // Example: struct Foo<T> { NonNull<T>, // this indicates a pointer PhantomData<T>, // this indicates a ownership } }

Propagate Heap Item Result

Sample Applications

SafeDrop

What is SafeDrop?

Rust is an emerging programming language that aims to prevent memory-safety bugs. However, the current design of Rust also brings side effects, which may increase the risk of memory-safety issues. In particular, it employs OBRM (ownership-based resource management) and enforces automatic deallocation of unused resources without the garbage collector. It may therefore falsely deallocate reclaimed memory and lead to use-after-free or double-free issues.

We study the problem of invalid memory deallocation and propose SafeDrop, a static path-sensitive data-flow analysis approach to detect such bugs. Our approach analyzes each API of a Rust crate iteratively by traversing the control-flow graph and extracting all aliases of each data-flow. To guarantee precision and scalability, we leverage a modified Tarjan algorithm to achieve scalable path-sensitive analysis, and a cache-based strategy to achieve efficient inter-procedural analysis.

Our experiment results show that our approach can successfully detect all existing CVEs of such issues with a limited number of false positives. The analysis overhead ranges from 1.0% to 110.7% in comparison with the original compilation time. We further apply our tool to several real-world Rust crates and find 8 Rust crates involved with invalid memory deallocation issues.

How SafeDrop works?

This section will demonstrate the execution process of SafeDrop using a test case, linking the detection process to the main code of SafeDrop.

POC

For the test case, we chose the same PoC as in the paper to facilitate user understanding and testing:

fn genvec() -> Vec<u8> { let mut s = String::from("a_tmp_string"); let ptr = s.as_mut_ptr(); let v; unsafe { v = Vec::from_raw_parts(ptr, s.len(), s.len()); } // mem:: forgets); // do not drop s // otherwise, s is dropped before return return v; } fn main() { let v = genvec(); // use v -> use after free // drop v before return →> double free }

SafeDrop Query

First, in the RAP backend, we embedded a Query in the compiler called SafeDrop.

This Query can be enabled through a command line parameter in the frontend, located in the file compiler/rustc_interface/src/passes.rs:

#![allow(unused)] fn main() { if env::var_os("RUSTC_BOOTSTRAP").is_none() && env::var_os("SAFEDROP").is_some() { sess.time("safedrop_check", || { tcx.hir().par_body_owners(|def_id| tcx.ensure().safedrop_check(def_id));}); } }

The environment variable "SAFEDROP" is set by the front end, while "RUSTC_BOOTSTRAP" is used to prevent calling this query during Rustc's bootstrap process. It should only be used when analyzing third-party programs.

We have inserted the query code into compiler/rustc_mir_transform/src/lib.rs. This query's function body is called whenever Rust analyzes each function.

#![allow(unused)] fn main() { fn safedrop_check<'tcx>(tcx: TyCtxt<'tcx>, def_id: DefId) -> () { if let Some(_other) = tcx.hir().body_const_context(def_id.expect_local()){ return; } if tcx.is_mir_available(def_id) { rap_info!("{:?}", def_id); let body = tcx.optimized_mir(def_id); let mut func_map = FuncMap::new(); let mut safedrop_graph = SafeDropGraph::new(&body, tcx, def_id); safedrop_graph.solve_scc(); safedrop_graph.safedrop_check(0, tcx, &mut func_map); if safedrop_graph.visit_times <= 10000{ safedrop_graph.output_warning(); } else{ rap_error!("SafeDrop: Over_visited: {:?}", def_id); } } } }

Data Structure

For SafeDrop, the data structure SafeDropGraph<'tcx> maintains the context of the analysis results.

Our analyzer constructs an instance for each analyzed function, the definition is provided as follows:

#![allow(unused)] fn main() { pub struct SafeDropGraph<'tcx>{ pub def_id: DefId, pub span: Span, // contains all varibles (including fields) as nodes. pub nodes: Vec<Node>, // contains all blocks in the CFG pub blocks: Vec<BlockNode<'tcx>>, pub arg_size: usize, // we shrink a SCC into a node and use a father node to represent the SCC. pub father_block: Vec<usize>, // record the constant value during safedrop checking. pub constant_bool: FxHashMap<usize, usize>, // used for tarjan algorithmn. pub count: usize, // contains the return results for inter-procedure analysis. pub return_results: ReturnResults, // used for filtering duplicate alias assignments in return results. pub return_set: FxHashSet<(usize, usize)>, // record the information of bugs for the function. pub bug_records: BugRecords, // a threhold to avoid path explosion. pub visit_times: usize } }

Next, we will mainly discuss several important functions of SafeDropGraph<'tcx>. It is evident that the processing logic in the query is quite simple, with two key methods: solve_scc and safedrop_check.

Graph Traversal

#![allow(unused)] fn main() { safedrop_graph.solve_scc(); safedrop_graph.safedrop_check(0, tcx, &mut func_map); }

Bug Reporter

Type Filter

rCanary

What is rCanary?

Rust is an effective system programming language that guarantees memory safety via compile-time verifications. It employs a novel ownership-based resource management model to facilitate automated deallocation. This model is anticipated to eliminate memory leaks.

However, we observed that user intervention drives it into semi-automated memory management and makes it error-prone to cause leaks. In contrast to violating memory-safety guarantees restricted by the unsafe keyword, the boundary of leaking memory is implicit, and the compiler would not emit any warnings for developers.

We present RCANARY, a static, non-intrusive, and fully automated model checker to detect leaks across the semi-automated boundary. We design an encoder to abstract data with heap allocation and formalize a refined leak-free memory model based on boolean satisfiability. It can generate SMT-Lib2 format constraints for Rust MIR and is implemented as a Cargo component. We evaluate R C ANARY by using flawed package benchmarks collected from the pull requests of open-source Rust projects. The results indicate that it is possible to recall all these defects with acceptable false positives.

We apply our tool to more than 1,200 real-world crates from crates.io and GitHub, identifying 19 crates having memory leaks. Our analyzer is also efficient, that costs 8.4 seconds per package.

How rCanary rCanary?

This section will demonstrate the execution process of rCanary using a test case, linking the detection process to the main code of rCanary.

POC

For the test case, we chose the same PoC as in the paper to facilitate user understanding and testing:

fn main() { let mut buf = Box::new("buffer"); // heap item ’buf’ becomes an orphan object let ptr = &mut * ManuallyDrop::new(buf) as * mut _; // leak by missing free operation on ’ptr’ // unsafe { drop_in_place(ptr); } }

Front-end Entry

First, in the RAP front-end, we give an entry in the source file.

#![allow(unused)] fn main() { impl Callbacks for RapCompilerCalls { fn after_analysis<'tcx>( &mut self, compiler: &Compiler, queries: &'tcx Queries<'tcx>, ) -> Compilation { compiler.session().abort_if_errors(); Verbosity::init_rap_log_system_with_verbosity(self.rap_config.verbose()).expect("Failed to set up RAP log system"); rap_info!("RAP Start"); queries.global_ctxt().unwrap().enter( |tcx| start_analyzer(tcx, self.rap_config) ); rap_info!("RAP Stop"); compiler.session().abort_if_errors(); Compilation::Continue } } }

This function is an implementation of the Callbacks trait in Rustc, where the after_analysis method is called after the compiler completes MIR analysis of the local crate. We use this function to intercept Rustc's metadata.

The start_analyzer function defines all the analysis processes of rCanary, with two important steps being TypeAnalysis and FlowAnalysis, corresponding to the ADT-DEF Analysis and constraint construction, and constraint solving described in the paper.

We will introduce the internal implementation in the following sections.

#![allow(unused)] fn main() { pub fn start_analyzer(tcx: TyCtxt, config: RapConfig) { let rcx_boxed = Box::new(RapGlobalCtxt::new(tcx, config)); let rcx = Box::leak(rcx_boxed); if config.is_rcanary_enabled() { run_analyzer( "Type Analysis", || TypeAnalysis::new(rcx).start() ); run_analyzer( "Flow Analysis", || FlowAnalysis::new(rcx).start() ); } } }

unsafety propogation and isolation graphs

The unsafety propogation graph(UPG) is a novel method to model the essential usage and encapsulation of unsafe code. UPG combines the traditional call graph with unsafe and dataflow information from Rust to capture the use and propagation of unsafe code across the project.

Within the UPG, there contains four major isolation types and nine structural patterns to split a UPG into several small self-contained subgraphs. These subgraphs called unsafety isolation graphs(UIGs) can serve as useful audit units for examining the soundness of unsafe code encapsulation.

We will continue to explore and investigate more effective applications of UIG in the encapsulation of unsafe code in our subsequent research.

Before using this feature, make sure that graphviz is installed on your device

usage

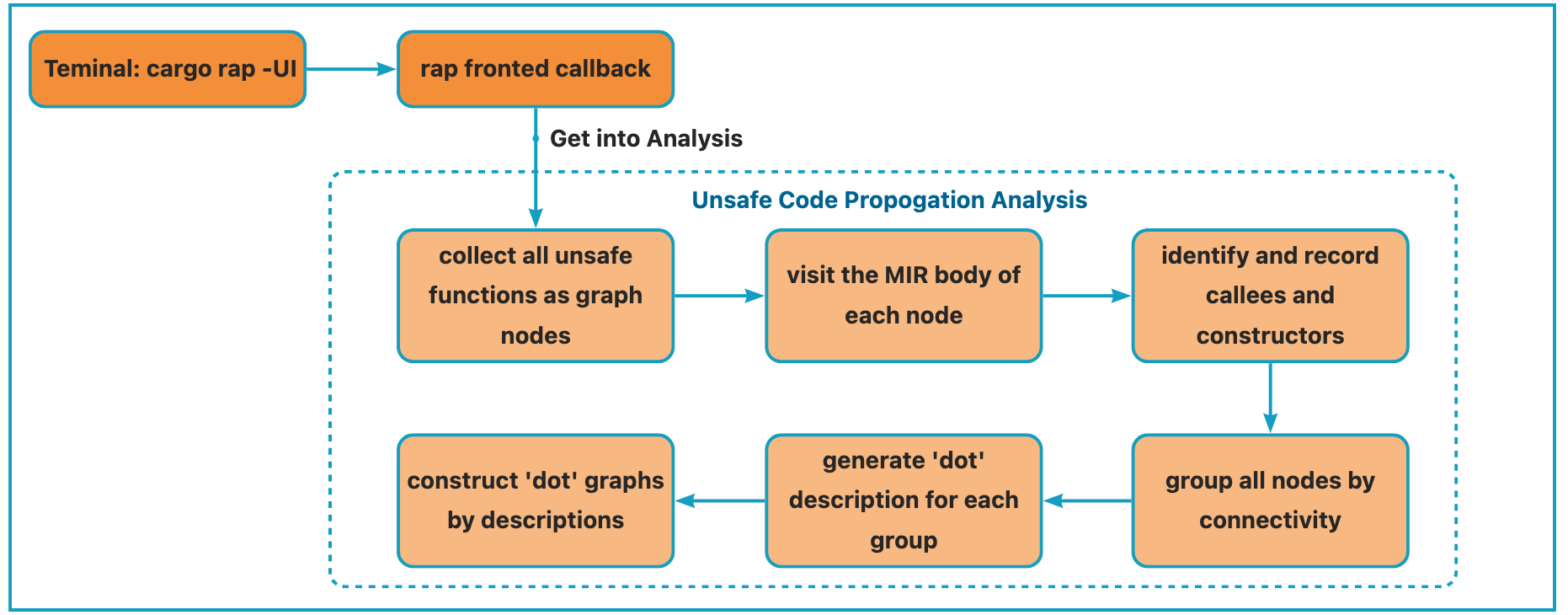

Get into the same directory as cargo.toml and run the cmd below from the teminal. Then 'rap' will create a directory named 'UPG' in the same level, which contains several connected unsafety propogation graphs.

cargo rap -UI

framework

The overall framework of this feature is consistent with rap's frontend framework. See the picture below for details.